As artificial intelligence rapidly evolves, its influence stretches far beyond tech labs and business boardrooms. AI is now entering one of the most delicate and complex areas of human life: mental health. From virtual therapists to emotion-tracking algorithms, technology is being designed to support mental well-being, but not without raising critical concerns.

So, is AI a mental health ally or a digital double-edged sword?

The Positive Potential of AI in Mental Health

AI tools are already being used in groundbreaking ways to identify, manage, and even predict mental health conditions. Here’s how they’re helping:

1. Early Detection and Diagnosis

Machine learning algorithms can analyze speech patterns, facial expressions, and even social media behavior to look for possible signs of depression, anxiety, or other disorders. For example:

- Natural Language Processing (NLP) can detect changes in tone or vocabulary that might suggest emotional distress.

- AI systems may be able to flag warning signs earlier than traditional screening methods, creating a chance for timely intervention.
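To make the idea of "changes in vocabulary" more concrete, here is a deliberately simplified Python sketch. It assumes a small, hypothetical list of negative-emotion words (`NEGATIVE_WORDS`). It compares how often those words appear in recent journal entries with how often they appeared in an earlier baseline. Real systems rely on trained language models and clinical validation. This toy word-count approach is only an illustration, not a diagnostic tool.

```python
import re

# Hypothetical, illustrative word list; NOT a validated clinical lexicon.
NEGATIVE_WORDS = {"sad", "hopeless", "tired", "alone", "worthless", "empty"}


def negative_rate(text: str) -> float:
    """Return the fraction of words in `text` that appear in NEGATIVE_WORDS."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    hits = sum(1 for w in words if w in NEGATIVE_WORDS)
    return hits / len(words)


def vocabulary_shift(baseline: list[str], recent: list[str]) -> float:
    """Average negative-word rate of recent entries minus that of baseline entries.

    A positive value means recent entries use negative words more often than before.
    """
    def avg(entries: list[str]) -> float:
        return sum(negative_rate(e) for e in entries) / len(entries) if entries else 0.0

    return avg(recent) - avg(baseline)


def flag_for_review(baseline: list[str], recent: list[str], threshold: float = 0.05) -> bool:
    """Flag entries for a HUMAN to review when the shift exceeds a hypothetical threshold."""
    return vocabulary_shift(baseline, recent) > threshold


if __name__ == "__main__":
    baseline = ["Had a nice walk with friends today", "Work was busy but fine"]
    recent = ["I feel so tired and alone", "Everything seems hopeless and empty"]
    print(flag_for_review(baseline, recent))
```

The output is only a flag for review, not a conclusion. That mirrors the point above: such signals are a prompt for timely human attention, not a diagnosis.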

2. Accessible Mental Health Support

Not everyone has access to a licensed therapist. AI-powered chatbots like Woebot or Wysa aim to fill that gap by offering:

- 24/7 conversational support

- Cognitive Behavioral Therapy (CBT) techniques

- Journaling prompts and mood tracking

While not a replacement for human therapy, these tools can be especially valuable in underserved or remote areas.

3. Personalized Treatment Plans

AI can help therapists and psychiatrists develop more tailored treatments by analyzing large datasets. This includes:

- Predicting which medications might work best based on genetic or behavioral profiles

- Tracking progress and adjusting strategies in real time

The Darker Side: Risks and Ethical Concerns

Despite the benefits, there are significant drawbacks and dangers to using AI in mental health care.

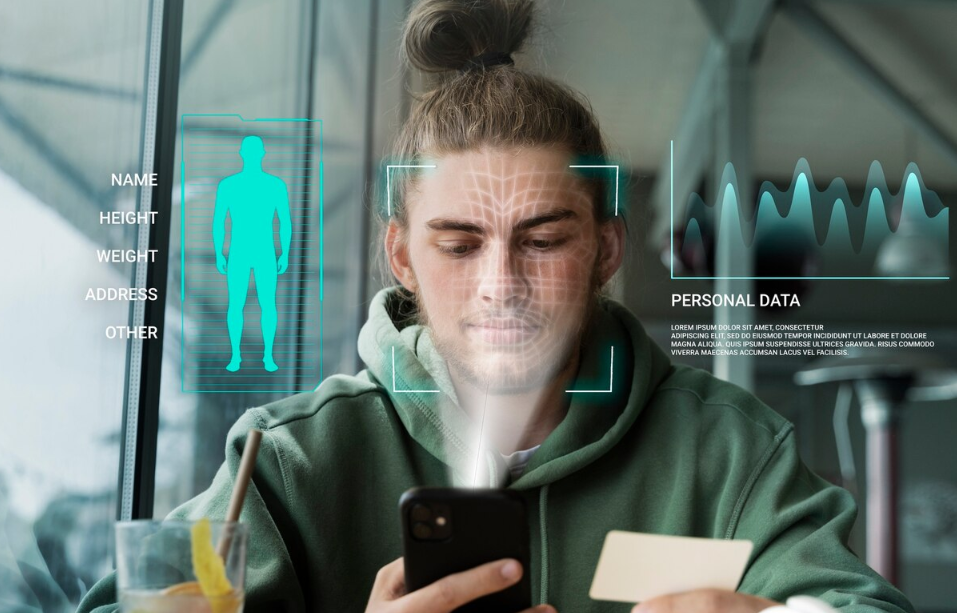

1. Privacy and Data Security

Mental health data is among the most sensitive personal information. When apps and AI systems collect this data:

- Who owns it?

- How is it stored and protected?

- Could it be misused by insurers, employers, or advertisers?

Without strong regulations, users could face serious violations of their privacy.

2. Over-Reliance on AI

AI cannot understand nuance, trauma, or complex human emotions the way a trained therapist can. Relying too heavily on AI:

- May lead to misdiagnosis

- Could cause users to feel isolated or misunderstood

- Risks replacing essential human empathy with scripted responses

3. Bias and Inequality

AI systems are only as good as the data they are trained on. If training data lacks diversity:

- Marginalized groups may be misrepresented or underserved

- Cultural differences in expressing emotion may be misinterpreted

In mental health, these biases can have serious consequences.
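One common way to check for this kind of bias is to compare how well a screening model performs for each group in a labeled evaluation set. The Python sketch below computes per-group recall, meaning the share of people who truly need support that the model actually flags. The records, group names, and the 0.1 gap tolerance are all hypothetical. A real audit would use properly collected data and several fairness metrics.

```python
from collections import defaultdict

# Each record: (group, true_label, predicted_label); 1 = needs support, 0 = does not.
# These records are made up purely for illustration.
records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 1, 0), ("group_b", 1, 1), ("group_b", 0, 0),
]


def recall_by_group(rows):
    """Return {group: recall}, where recall = true positives / actual positives."""
    positives = defaultdict(int)
    true_positives = defaultdict(int)
    for group, actual, predicted in rows:
        if actual == 1:
            positives[group] += 1
            if predicted == 1:
                true_positives[group] += 1
    return {g: true_positives[g] / positives[g] for g in positives}


def max_recall_gap(rows) -> float:
    """Largest difference in recall between any two groups."""
    recalls = recall_by_group(rows)
    return max(recalls.values()) - min(recalls.values())


if __name__ == "__main__":
    print(recall_by_group(records))
    gap = max_recall_gap(records)
    # Hypothetical tolerance; an acceptable gap depends on the clinical context.
    if gap > 0.1:
        print(f"Recall gap of {gap:.2f} between groups: review the training data.")
```

Recall is used here because, in a screening setting, missing someone who needs help is usually the costlier error. Other measures, such as false-positive rates per group, matter as well.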

A Balanced Future

The goal should not be to replace therapists with robots, but to augment human care with smart tools. With proper safeguards, AI can become a valuable partner in mental wellness. Key steps for a balanced future include:

- Transparent AI development

- Robust privacy protections

- Clinical validation and oversight

- Ethical use of data and algorithms

Final Thoughts

AI has the power to transform mental health care for the better by making support more accessible, personalized, and proactive. But it also carries real risks that must be carefully managed. As we navigate this new frontier, the focus must stay on empathy, ethics, and the irreplaceable value of human connection.